CPU Magic: Transistors to Execution Pipeline Mastery

Introduction

The digital landscape we inhabit today is powered by an unseen force that drives our devices and fuels our digital experiences. Behind the sleek screens and seamless interfaces lies the intricate realm of CPU architecture, the beating heart of computing. This article takes you on a journey through the evolution and mechanics of CPU architecture, from the transistors at its foundation to the execution pipelines that run every program.

Setting the Stage: The Importance of CPU Architecture

Picture a world without CPUs – the brains of our machines. They are the unsung heroes that decipher our commands and orchestrate the complex dance of digital operations. To understand this enigmatic technology is to glimpse the essence of modern life.

A Journey into the Heart of Computing

Delve into the core of computing, and you’ll find yourself in a realm of transistors, pathways, and calculations that transform raw data into meaningful actions. This journey takes us from the earliest days of computing to the cutting-edge innovations that shape our future.

Section 1: The Building Blocks

The Marvelous World of Transistors

Step into the realm of transistors – the tiny switches that spark the magic of computing. These silicon wonders form the foundation of modern electronics, enabling us to manipulate and store information with breathtaking precision.

Exploring the Transistor Revolution

Uncover the astonishing history of transistors, from their invention to the revolution they sparked in the world of electronics. Witness how minuscule transistors replaced bulky vacuum tubes and unlocked the digital age.

How Transistors Enable Digital Computing

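Unlock the mystery of how transistors enable digital computing. A transistor acts as an electrically controlled switch, and its two states, on and off, map naturally onto the binary digits 1 and 0. Combined in large numbers, these switches carry out the binary operations that power modern computing, changing state billions of times per second in a modern processor.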

From Gates to Circuits: Building Logic Blocks

Dive deeper into the world of logic gates – the building blocks of computation. These gates perform logical operations that form the basis of all digital operations, turning 1s and 0s into meaningful outcomes.

AND, OR, NOT Gates: The Basics

Demystify the essential logic gates – AND, OR, and NOT. These gates act as the language of computers, enabling them to perform intricate calculations and comparisons with astonishing speed.
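To make the three basic gates concrete, here is a minimal Python sketch that models each gate as a function on the bits 0 and 1 and builds its truth table. The function names `AND`, `OR`, `NOT`, and `truth_table` are illustrative choices, not part of any hardware description language.

```python
from itertools import product

# Each gate is modelled as a function on single bits (0 or 1).
def AND(a: int, b: int) -> int:
    return a & b

def OR(a: int, b: int) -> int:
    return a | b

def NOT(a: int) -> int:
    return 1 - a

def truth_table():
    """Return rows of (a, b, a AND b, a OR b, NOT a) for every input pair."""
    return [(a, b, AND(a, b), OR(a, b), NOT(a)) for a, b in product((0, 1), repeat=2)]

print("a b | AND OR NOT a")
for a, b, and_ab, or_ab, not_a in truth_table():
    print(f"{a} {b} |  {and_ab}   {or_ab}    {not_a}")
```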

Combining Gates into Logical Circuits

Witness the marriage of logic gates to create logical circuits that execute complex tasks. Explore how these circuits form the fundamental pathways that process data and execute instructions.
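A classic example of combining gates is the adder. The sketch below is a simplified software model rather than a hardware design: it builds XOR from AND, OR, and NOT, combines gates into a one-bit full adder, and chains eight full adders into a ripple-carry adder. The names `full_adder` and `ripple_add` are illustrative.

```python
def AND(a, b):
    return a & b

def OR(a, b):
    return a | b

def NOT(a):
    return 1 - a

def XOR(a, b):
    # XOR built only from the three basic gates: (a OR b) AND NOT (a AND b)
    return AND(OR(a, b), NOT(AND(a, b)))

def full_adder(a, b, carry_in):
    """Add three bits; return (sum_bit, carry_out)."""
    partial = XOR(a, b)
    sum_bit = XOR(partial, carry_in)
    carry_out = OR(AND(a, b), AND(partial, carry_in))
    return sum_bit, carry_out

def ripple_add(x, y, width=8):
    """Add two unsigned integers bit by bit, passing the carry along like a ripple-carry adder."""
    result, carry = 0, 0
    for i in range(width):
        bit, carry = full_adder((x >> i) & 1, (y >> i) & 1, carry)
        result |= bit << i
    return result, carry  # carry is the final carry-out (overflow past `width` bits)

print(ripple_add(100, 55))   # (155, 0)
print(ripple_add(200, 100))  # (44, 1): 300 does not fit in 8 bits
```

Each stage must wait for the carry from the stage below it, which is why wide adders in real CPUs use faster carry schemes; the ripple version is simply the easiest to read.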

Section 2: Inside the CPU

CPU Components Demystified

Peek inside the CPU and unveil its intricate components that collaborate to perform a symphony of operations. These components include the control unit, arithmetic logic unit (ALU), and registers.

Control Unit: Orchestrating the Symphony

Discover the maestro of the CPU – the control unit. This conductor interprets instructions, coordinates actions, and ensures that the show runs smoothly, instructing every component when to play its part.

Arithmetic Logic Unit (ALU): Crunching Numbers

Meet the mathematical powerhouse of the CPU – the ALU. It performs arithmetic and logical operations that transform data into meaningful results, whether you’re adding numbers or making decisions.
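A minimal sketch of an ALU as a function that takes an operation name and two 32-bit operands. The operation names loosely follow RISC-style mnemonics but are assumptions for illustration; a hardware ALU selects its operation through control signals from the control unit, not through strings.

```python
MASK32 = 0xFFFFFFFF

def to_signed(x: int) -> int:
    """Interpret a 32-bit pattern as a two's-complement signed integer."""
    return x - (1 << 32) if x & 0x80000000 else x

# Hypothetical operation table: name -> function on two 32-bit values.
OPS = {
    "add": lambda a, b: (a + b) & MASK32,
    "sub": lambda a, b: (a - b) & MASK32,
    "and": lambda a, b: a & b,
    "or":  lambda a, b: a | b,
    "xor": lambda a, b: a ^ b,
    # Set-less-than: a signed comparison whose 0/1 result software can branch on.
    "slt": lambda a, b: int(to_signed(a) < to_signed(b)),
}

def alu(op: str, a: int, b: int) -> int:
    """Apply operation `op` to two operands truncated to 32 bits."""
    return OPS[op](a & MASK32, b & MASK32)

print(hex(alu("sub", 3, 5)))      # 0xfffffffe, i.e. -2 in two's complement
print(alu("slt", 0xFFFFFFFF, 1))  # 1, because -1 < 1
```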

Registers: Temporary Data Storage

Unveil the fast and fleeting memory of the CPU – registers. These temporary storage units hold data that needs to be manipulated or processed, ensuring that the right information is always at hand.

Section 3: CPU Architecture Evolution

Early Days: Single Accumulator Architecture

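Step back in time to the early days of CPUs, when the single accumulator architecture ruled: nearly every arithmetic result passed through one register, the accumulator, while the other operand came from memory. Explore the simplicity of this approach and how it paved the way for the computing marvels of today.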

Understanding the Von Neumann Architecture

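Embark on a journey through the groundbreaking Von Neumann architecture, a blueprint that stores instructions and data in the same memory. Because a program is just data the CPU can read, computers became flexible, general-purpose machines that can load and run new software without being rewired.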
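The sketch below combines the two ideas in this section: a single-accumulator machine whose instructions and data live in the same memory list, as in the Von Neumann model. The four mnemonics (`LOAD`, `ADD`, `STORE`, `HALT`) and the memory layout are hypothetical, chosen only to show the fetch-decode-execute loop.

```python
def run(memory):
    """Execute a tiny accumulator machine; instructions and data share `memory`."""
    acc = 0  # the single accumulator register
    pc = 0   # program counter: address of the next instruction
    while True:
        op, addr = memory[pc]  # fetch from the same memory that holds data
        pc += 1
        if op == "LOAD":       # decode and execute
            acc = memory[addr]
        elif op == "ADD":
            acc += memory[addr]
        elif op == "STORE":
            memory[addr] = acc
        elif op == "HALT":
            return memory
        else:
            raise ValueError(f"unknown opcode {op!r} at address {pc - 1}")

memory = [
    ("LOAD", 5),     # 0: acc <- mem[5]
    ("ADD", 6),      # 1: acc <- acc + mem[6]
    ("STORE", 7),    # 2: mem[7] <- acc
    ("HALT", None),  # 3
    0,               # 4: unused
    20,              # 5: data
    22,              # 6: data
    0,               # 7: result goes here
]
print(run(memory)[7])  # 42
```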

Complex Dreams: Introduction of Microarchitecture

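Witness the dawn of microarchitecture: the separation between an instruction set, which software sees, and the hardware organization that implements it. Dive into how this separation let CPUs embrace complexity and specialization, revolutionizing performance while still running the same programs.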

CISC vs. RISC: The Great Debate

Navigate the contrasting worlds of Complex Instruction Set Computing (CISC) and Reduced Instruction Set Computing (RISC). Uncover the advantages and trade-offs of each approach in the pursuit of CPU efficiency.
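To illustrate the trade-off, the sketch below performs the same memory-to-memory addition two ways: as one complex instruction in the CISC style, and as the four simple load/store instructions a RISC machine would need. Both functions and the register names are hypothetical; they model instruction count, not real timing.

```python
def cisc_add(mem, dst, src):
    """One CISC-style instruction, e.g. ADD [dst], [src]: two memory reads and a memory write."""
    mem[dst] = mem[dst] + mem[src]
    return 1  # instructions executed

def risc_add(mem, regs, dst, src):
    """The same work on a load/store machine: only LOAD and STORE touch memory."""
    regs["r1"] = mem[dst]                 # LOAD  r1, [dst]
    regs["r2"] = mem[src]                 # LOAD  r2, [src]
    regs["r1"] = regs["r1"] + regs["r2"]  # ADD   r1, r1, r2
    mem[dst] = regs["r1"]                 # STORE r1, [dst]
    return 4  # instructions executed

a = {0: 10, 1: 32}
b = {0: 10, 1: 32}
print(cisc_add(a, 0, 1), a[0])      # 1 42
print(risc_add(b, {}, 0, 1), b[0])  # 4 42
```

The CISC version needs fewer instructions, but each one is harder to decode and pipeline; the RISC version needs more instructions, each simple and uniform, which makes pipelining easier.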

Superscalar and VLIW Architectures

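Explore superscalar and Very Long Instruction Word (VLIW) architectures. Both amplify CPU performance by executing multiple instructions in parallel: superscalar processors find independent instructions in hardware at run time, while VLIW processors rely on the compiler to group them ahead of time.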

Section 4: Pipeline Performance

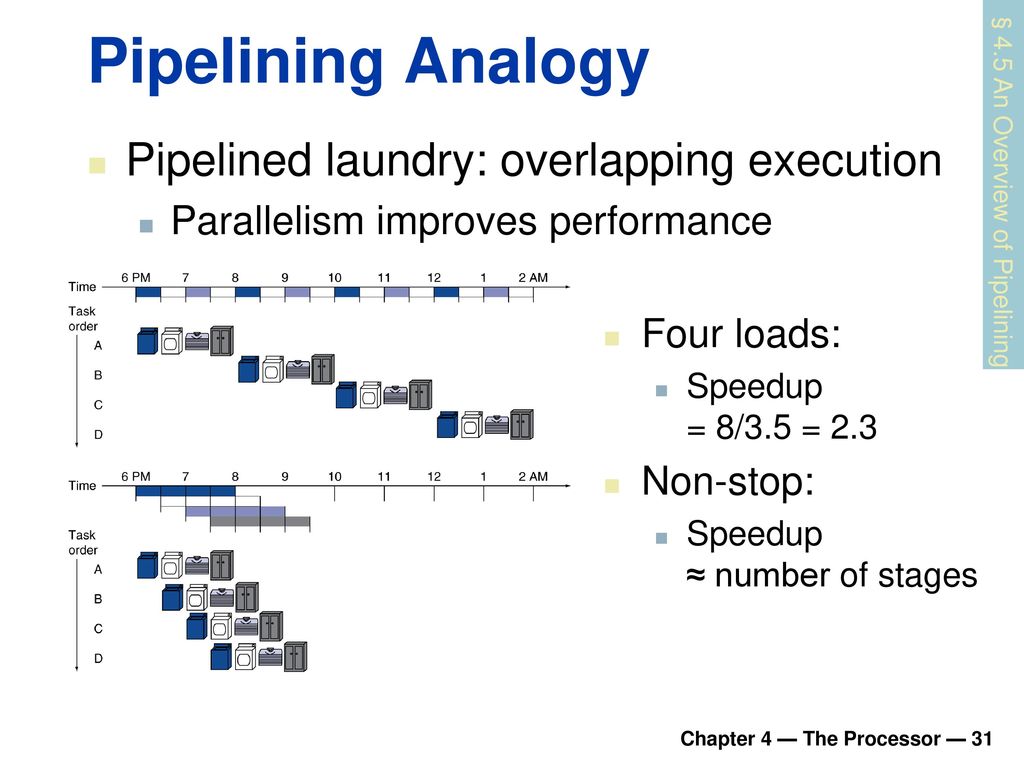

From Single to Multi-Stage Pipelines

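Experience the leap from single-stage (non-pipelined) execution to multi-stage pipelines, a breakthrough that turbocharged CPU performance. Witness how splitting instruction execution into stages lets several instructions be in flight at once, so that, ideally, one instruction finishes every clock cycle.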

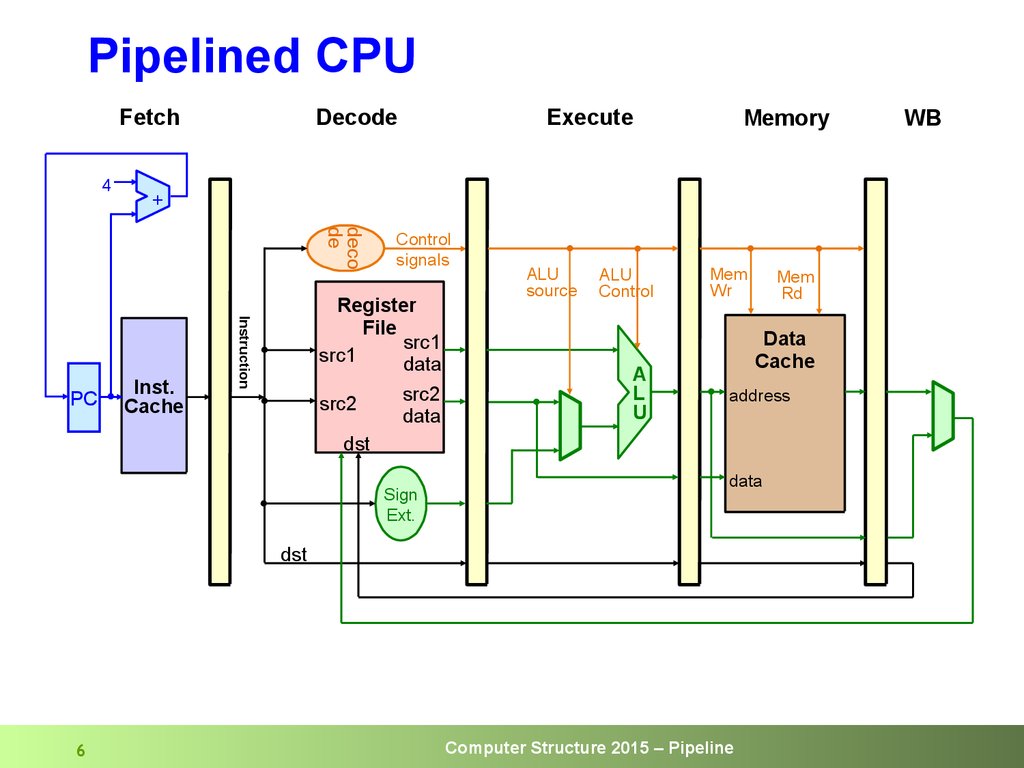

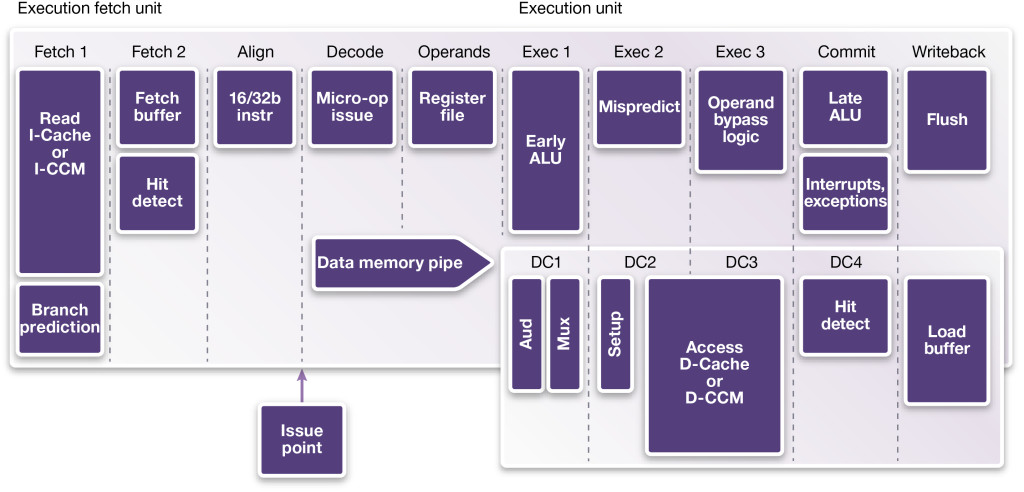

Breaking Down Instruction Execution

Unveil the intricate process of instruction execution. Explore how instructions are fetched, decoded, executed, and written back – a carefully choreographed dance that brings software to life.

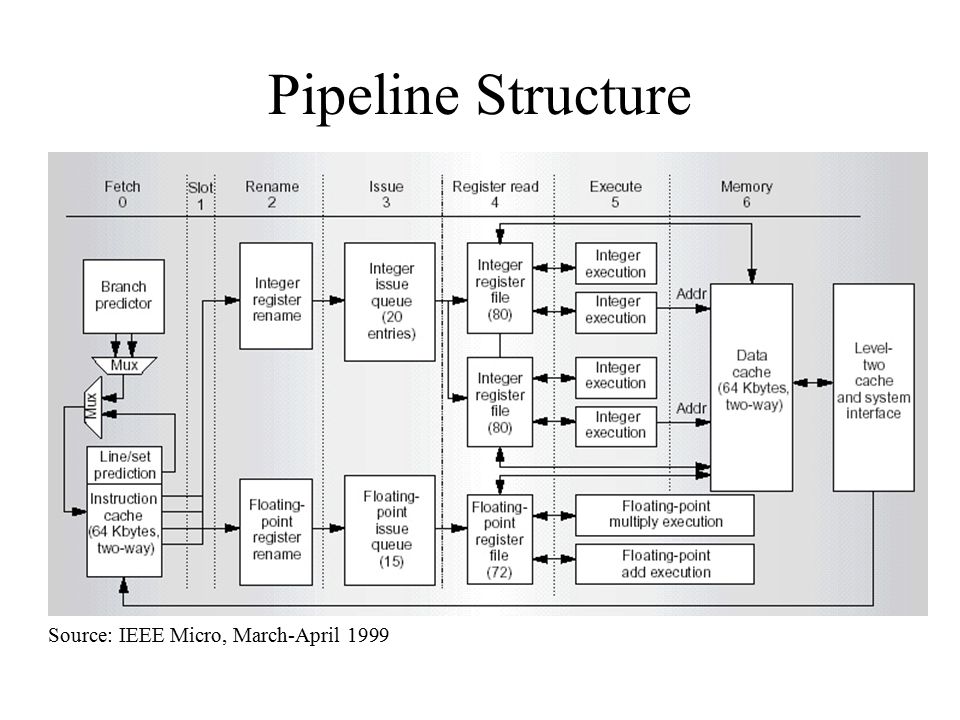

Pipeline Stages: Fetch, Decode, Execute, Writeback

Peek behind the curtain of each pipeline stage. From fetching instructions to writing back results, understand how each stage contributes to seamless and efficient CPU operation.
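Assuming an ideal pipeline in which every stage takes one clock cycle and no stalls occur, n instructions need n × k cycles without pipelining but only k + n - 1 cycles with a k-stage pipeline. The Python sketch below computes both and prints a timing diagram using the four stages named above; the function names are illustrative.

```python
STAGES = ("IF", "ID", "EX", "WB")  # Fetch, Decode, Execute, Writeback

def total_cycles(n: int, k: int, pipelined: bool = True) -> int:
    """Cycles to finish n instructions on an ideal k-stage machine."""
    return k + n - 1 if pipelined else n * k

def timing_diagram(n: int, stages=STAGES) -> list:
    """One row per instruction; instruction i enters the first stage in cycle i."""
    rows = []
    for i in range(n):
        cells = ["  "] * i + list(stages)  # blank cells until the instruction starts
        rows.append(f"I{i}: " + " ".join(cells))
    return rows

for row in timing_diagram(4):
    print(row)
print(total_cycles(100, 4, pipelined=False))  # 400
print(total_cycles(100, 4))                   # 103
```

With 100 instructions the ideal speed-up (400 / 103) approaches k = 4; real pipelines fall short of this because of hazards.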

The Pipeline Bottleneck: Hazards and Solutions

Face the challenges that come with pipeline operation: hazards that slow down the CPU’s rhythm. Discover techniques like stalling, data forwarding, and branch prediction that keep the pipeline flowing.

Data Hazards and Forwarding

Dive into the world of data hazards, where an instruction needs a result that an earlier instruction has not yet written back. Learn how forwarding passes results directly from one pipeline stage to another, removing most of the resulting stalls.
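The sketch below counts stall cycles caused by read-after-write hazards in the classic five-stage textbook pipeline (IF, ID, EX, MEM, WB), which adds a memory stage to the four stages named earlier. It assumes an in-order, single-issue pipeline, a register file written in the first half of a cycle and read in the second, and a hypothetical mini-ISA where `lw` is a load and every other op is an ALU operation. Without forwarding, a back-to-back dependency costs two bubbles; with forwarding, only a load followed by its consumer still costs one.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

@dataclass
class Instr:
    op: str                  # hypothetical mini-ISA: "lw" (load) or an ALU op such as "add"
    dest: Optional[str]
    srcs: Tuple[str, ...] = ()

def count_stalls(program, forwarding: bool) -> int:
    """Stall cycles for an in-order IF/ID/EX/MEM/WB pipeline (RAW hazards only)."""
    id_cycle = []     # cycle in which each instruction occupies the ID stage
    last_writer = {}  # register -> index of the most recent instruction writing it
    stalls = 0
    for i, ins in enumerate(program):
        no_stall = id_cycle[-1] + 1 if id_cycle else 1
        earliest = no_stall
        for src in ins.srcs:
            if src not in last_writer:
                continue
            j = last_writer[src]
            if not forwarding:
                need = id_cycle[j] + 3  # wait until the producer's WB (split-cycle register file)
            elif program[j].op == "lw":
                need = id_cycle[j] + 2  # load-use: data leaves MEM, one bubble remains
            else:
                need = id_cycle[j] + 1  # ALU result forwarded from EX: no bubble
            earliest = max(earliest, need)
        stalls += earliest - no_stall
        id_cycle.append(earliest)
        if ins.dest:
            last_writer[ins.dest] = i
    return stalls

program = [
    Instr("lw", "r1", ("r0",)),        # r1 <- mem[r0]
    Instr("add", "r2", ("r1", "r3")),  # needs r1 immediately (load-use)
    Instr("sub", "r4", ("r2", "r5")),  # needs r2 immediately
]
print(count_stalls(program, forwarding=False))  # 4
print(count_stalls(program, forwarding=True))   # 1
```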

Control Hazards and Branch Prediction

Uncover the complexity of control hazards, where branch instructions disrupt the flow. Explore branch prediction techniques that anticipate the outcome, keeping the pipeline humming.
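A common back-of-the-envelope model for the cost of control hazards is: effective CPI = base CPI + (fraction of instructions that are branches) × (misprediction rate) × (penalty in cycles). The sketch below evaluates it; the input numbers are illustrative assumptions, not measurements of any particular CPU.

```python
def effective_cpi(base_cpi: float, branch_fraction: float,
                  mispredict_rate: float, penalty_cycles: int) -> float:
    """Average cycles per instruction once branch mispredictions are accounted for."""
    return base_cpi + branch_fraction * mispredict_rate * penalty_cycles

# Assumed workload: base CPI of 1, 20% branches, 3-cycle flush penalty per misprediction.
for rate in (0.5, 0.1, 0.02):  # from a coin flip to a good predictor
    print(f"mispredict rate {rate:.0%}: CPI = {effective_cpi(1.0, 0.2, rate, 3):.2f}")
```

Under these assumptions a coin-flip predictor raises CPI to about 1.30, while a predictor that is right 98% of the time keeps it close to 1.01; deeper pipelines have larger penalties, which makes accurate prediction matter even more.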

Section 5: Deep Dive into Pipeline Stages

Fetch Stage: Bringing Instructions Home

Witness the inception of instruction execution with the fetch stage. Learn about the program counter (called the instruction pointer on x86), which holds the address of the next instruction, and the magic of instruction caching that speeds up the process.

Instruction Cache and Prefetching

Delve into the significance of instruction caching and prefetching. See how CPUs predict which instructions to fetch, ensuring that the right code is ready when needed.
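A minimal model of a direct-mapped instruction cache with optional next-line prefetching. The cache geometry (64 lines of 16 bytes) and the prefetch-on-miss policy are assumptions for illustration; real instruction caches are typically set-associative and use more elaborate prefetchers.

```python
def count_misses(addresses, num_lines=64, line_size=16, prefetch=False):
    """Count misses in a direct-mapped cache; optionally prefetch the next line on a miss."""
    tags = [None] * num_lines  # one tag per cache slot

    def fill(block):
        tags[block % num_lines] = block // num_lines

    misses = 0
    for addr in addresses:
        block = addr // line_size  # memory block (cache line) that holds addr
        if tags[block % num_lines] != block // num_lines:
            misses += 1
            fill(block)
            if prefetch:
                fill(block + 1)  # bet that sequential code will need the next line
    return misses

fetches = range(0, 256, 4)  # 64 sequential 4-byte instructions, no branches
print(count_misses(fetches))                 # 16: one cold miss per 16-byte line
print(count_misses(fetches, prefetch=True))  # 8: every other line arrives early
```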

Decode Stage: Cracking the Instruction Code

Unlock the secrets of the decode stage, where instructions are translated into the control signals that drive the rest of the pipeline. Delve into instruction formats, the differences between RISC and CISC decoding, and how the decoder reads source registers and checks for hazards.
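In a fixed-length RISC encoding, decoding is largely a matter of slicing bit fields. The sketch below decodes the RISC-V R-type format (opcode in bits 0 to 6, rd in 7 to 11, funct3 in 12 to 14, rs1 in 15 to 19, rs2 in 20 to 24, funct7 in 25 to 31) and names a few register-register operations. The helper names are illustrative, and only a handful of RV32I instructions are handled.

```python
R_TYPE_OPCODE = 0x33  # RISC-V OP (register-register) major opcode

# (funct3, funct7) -> mnemonic for a few RV32I R-type instructions
R_TYPE_NAMES = {
    (0b000, 0x00): "add",
    (0b000, 0x20): "sub",
    (0b100, 0x00): "xor",
    (0b110, 0x00): "or",
    (0b111, 0x00): "and",
}

def fields(word: int) -> dict:
    """Split a 32-bit instruction word into R-type fields."""
    return {
        "opcode": word & 0x7F,
        "rd": (word >> 7) & 0x1F,
        "funct3": (word >> 12) & 0x7,
        "rs1": (word >> 15) & 0x1F,
        "rs2": (word >> 20) & 0x1F,
        "funct7": (word >> 25) & 0x7F,
    }

def disassemble(word: int) -> str:
    f = fields(word)
    if f["opcode"] != R_TYPE_OPCODE:
        return f"<unhandled opcode {f['opcode']:#04x}>"
    name = R_TYPE_NAMES.get((f["funct3"], f["funct7"]), "<unknown R-type>")
    return f"{name} x{f['rd']}, x{f['rs1']}, x{f['rs2']}"

print(disassemble(0x002081B3))  # add x3, x1, x2
```

A CISC decoder such as an x86 front end cannot slice fixed positions like this, because instructions vary in length and must be parsed byte by byte.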

Execute Stage: Where the Action Happens

Step into the spotlight of the execute stage – where instructions come to life. Witness the ALU’s role in performing arithmetic, logic, and other operations critical to software execution.

ALU Operations: Arithmetic, Logic, and Beyond

Unleash the power of the Arithmetic Logic Unit (ALU). From basic addition to complex logical operations, explore how the ALU transforms data, making computations a reality.

Handling Data Dependencies and Out-of-Order Execution

Delve into the challenges of data dependencies that hinder pipeline flow. Discover how out-of-order execution lets independent instructions run while earlier ones wait for their operands, maximizing CPU utilization and performance.
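The sketch below compares an in-order machine, which stalls whenever the next instruction's operands are not ready, with an idealized out-of-order scheduler that issues the oldest ready instruction each cycle. It assumes one issue per cycle, unlimited functional units, made-up latencies, and no false (write-after-read or write-after-write) dependencies, which real CPUs remove with register renaming.

```python
from typing import NamedTuple, Tuple

class Op(NamedTuple):
    dest: str
    srcs: Tuple[str, ...]
    latency: int  # cycles until the result is available (assumed values)

def producers(program):
    """For each instruction, the indices of earlier instructions it reads from (RAW dependencies)."""
    last, deps = {}, []
    for i, op in enumerate(program):
        deps.append([last[s] for s in op.srcs if s in last])
        last[op.dest] = i
    return deps

def in_order(program) -> int:
    deps, done, t = producers(program), [], -1
    for i, op in enumerate(program):
        t = max([t + 1] + [done[j] for j in deps[i]])  # wait for operands, keep program order
        done.append(t + op.latency)
    return max(done)

def out_of_order(program) -> int:
    deps = producers(program)
    done = [None] * len(program)
    cycle = 0
    while None in done:
        for i, op in enumerate(program):  # oldest-first selection
            if done[i] is None and all(done[j] is not None and done[j] <= cycle for j in deps[i]):
                done[i] = cycle + op.latency
                break  # issue at most one instruction per cycle
        cycle += 1
    return max(done)

program = [
    Op("r1", ("r0",), 4),       # slow load
    Op("r2", ("r1", "r1"), 1),  # depends on the load
    Op("r3", ("r4", "r5"), 3),  # independent multiply
    Op("r6", ("r3", "r4"), 1),  # depends on the multiply
]
print(in_order(program))      # 9
print(out_of_order(program))  # 6
```

The out-of-order scheduler starts the independent multiply while the load is still in flight, so the two dependency chains overlap instead of running back to back.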

Writeback Stage: Sealing the Deal

Witness the grand finale of instruction execution – the writeback stage. Understand how the CPU updates registers and flags, finalizing the results for future operations.

Updating Registers and Flags

Peek into the moment when registers receive their updated values. Learn about flags that signal conditions like overflow or zero results, shaping the CPU’s next moves.
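The sketch below computes the four classic status flags for an 8-bit addition. The flag letters follow the N, Z, C, V convention used by ARM, among others; x86 calls the equivalents SF, ZF, CF, and OF. The function name is illustrative.

```python
def add8_with_flags(a: int, b: int):
    """8-bit addition returning the result and the Z, N, C, V flags."""
    raw = a + b
    result = raw & 0xFF
    flags = {
        "Z": result == 0,          # zero result
        "N": bool(result & 0x80),  # negative: sign bit of the result is set
        "C": raw > 0xFF,           # carry out of bit 7 (unsigned overflow)
        # signed overflow: both operands share a sign that the result does not
        "V": bool((a ^ result) & (b ^ result) & 0x80),
    }
    return result, flags

print(add8_with_flags(0x7F, 0x01))  # result 0x80 with N and V set
print(add8_with_flags(0xFF, 0x01))  # result 0x00 with Z and C set
```

The two calls show why carry and overflow are separate flags: 0xFF + 0x01 overflows as an unsigned sum but not as a signed one (-1 + 1 = 0), while 0x7F + 0x01 is the reverse (127 + 1 does not fit in a signed byte).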

Handling Multiple Instruction Results

Discover the intricacies of managing multiple instruction results. Uncover how the writeback stage ensures that every result finds its rightful place, enabling continuous operation.

Section 6: Achieving Parallelism

Understanding Instruction-Level Parallelism (ILP)

Enter the realm of instruction-level parallelism, where CPUs juggle multiple instructions simultaneously. Witness the magic of pipelining and superscalar execution.

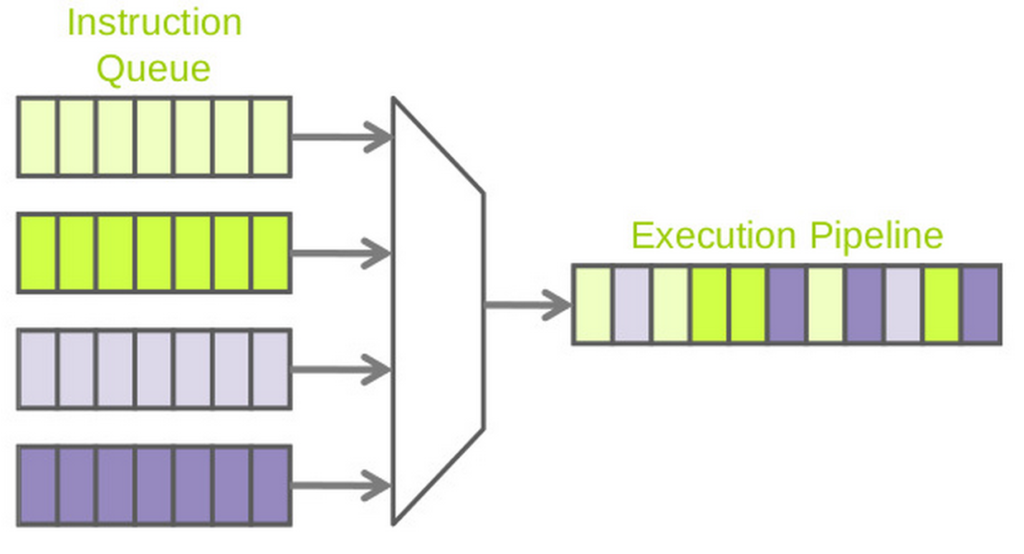

Pipelining and Superscalar Execution

Explore pipelining’s role in breaking down instructions into stages. Dive into superscalar execution, where multiple pipelines work in harmony, delivering impressive performance gains.

Speculative Execution: Risks and Rewards

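Unveil the concept of speculative execution, a technique in which the CPU executes instructions before it knows they are needed, for example past a branch whose outcome is not yet resolved, and discards the results if the guess turns out wrong. Learn how it offers rewards while also posing security challenges.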
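A highly simplified model of the risk: when a misprediction is detected, the CPU discards the speculative register writes, but a cache line brought in by a speculative load stays in the cache. That leftover footprint is the kind of side channel that Spectre-style attacks measure. Everything here (the `speculate` function, the 64-byte line size, the dictionary-based memory) is a hypothetical teaching model, not how any real processor is built.

```python
LINE_SIZE = 64  # assumed cache-line size in bytes

def speculate(regs, cache, memory, loads, prediction_correct):
    """Run `loads` speculatively; roll back registers (but not the cache) on a misprediction."""
    checkpoint = dict(regs)          # snapshot of architectural register state
    for dest, addr in loads:
        regs[dest] = memory[addr]    # speculative register write
        cache.add(addr // LINE_SIZE) # side effect: the line is now cached
    if not prediction_correct:
        regs.clear()
        regs.update(checkpoint)      # squash: registers look as if nothing ran...
    return regs, cache               # ...but the cache still remembers the access

regs, cache = speculate({"r1": 0}, set(), {128: 7}, [("r1", 128)], prediction_correct=False)
print(regs)   # {'r1': 0}
print(cache)  # {2}
```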

Multithreading: Tackling Multiple Tasks

Embrace the art of multithreading, where CPUs tackle various tasks concurrently. Dive into simultaneous multithreading (SMT), which lets one core issue instructions from several threads to keep its execution units busy, and Intel’s implementation of it, Hyper-Threading Technology.

Section 7: Modern CPU Architectures

Out-of-Order Execution and Speculative Execution

Look closer at out-of-order execution and speculative execution, techniques that have been standard in high-performance processors since the 1990s. See how CPUs predict and rearrange instructions to extract more performance from each clock cycle.

How CPUs Predict and Execute Ahead

Explore the science behind CPU predictions and execution ahead of time. Understand how this innovation optimizes performance by keeping the CPU busy.

Meltdown, Spectre, and Security Concerns

Face the dark side of speculative execution – vulnerabilities like Meltdown and Spectre. Discover the security concerns that arise from this powerful technique.

Branch Prediction: Looking into the Crystal Ball

Witness the marvel of branch prediction, where CPUs anticipate which direction a branch instruction will take. Dive into static vs. dynamic prediction techniques and the role of hardware tables such as the branch target buffer.

Static vs. Dynamic Prediction Techniques

Compare the pros and cons of static and dynamic branch prediction methods. Uncover how CPUs use historical data to make accurate predictions, reducing pipeline stalls.
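The sketch compares a static always-taken rule with a dynamic 2-bit saturating counter, the textbook building block of dynamic predictors. The counter moves up on taken branches and down on not-taken ones, predicting taken in its two upper states. The branch outcome sequences are made up for illustration.

```python
def always_taken_mispredicts(outcomes):
    """Static rule: always predict taken."""
    return sum(1 for taken in outcomes if not taken)

def two_bit_mispredicts(outcomes, state=2):
    """Dynamic rule: one 2-bit saturating counter (0-1 predict not taken, 2-3 predict taken)."""
    mispredicts = 0
    for taken in outcomes:
        if (state >= 2) != taken:
            mispredicts += 1
        state = min(state + 1, 3) if taken else max(state - 1, 0)
    return mispredicts

loop_branch = ([True] * 9 + [False]) * 3  # loop of 10 iterations, run 3 times
rare_branch = ([False] * 9 + [True]) * 3  # e.g. an error check that seldom fires
for name, outcomes in (("loop", loop_branch), ("rare", rare_branch)):
    print(name, always_taken_mispredicts(outcomes), two_bit_mispredicts(outcomes))
# loop 3 3
# rare 27 4
```

On the loop branch the static rule is already good, but on the rarely taken branch it fails 27 times out of 30, while the counter adapts after its first miss.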

Branch Target Buffer and Pattern History Table

Explore the inner workings of the branch target buffer and pattern history table. See how these components improve the accuracy of branch prediction, enhancing CPU efficiency.
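A toy front end combining the two structures: a pattern history table (PHT) of 2-bit counters decides whether a branch is taken, and a branch target buffer (BTB) remembers where taken branches go. Indexing the PHT directly by PC bits is the simple bimodal scheme; predictors such as gshare also mix in global branch history. The class, its table size, and the dictionary standing in for the BTB are assumptions for illustration.

```python
class BranchPredictor:
    """Hypothetical predictor: a PHT of 2-bit counters plus a BTB of known targets."""

    def __init__(self, pht_size: int = 1024):
        self.pht = [1] * pht_size  # 2-bit counters, starting weakly not-taken
        self.btb = {}              # branch PC -> last target (real BTBs are small tagged caches)

    def _index(self, pc: int) -> int:
        return (pc >> 2) % len(self.pht)  # assumes 4-byte aligned instructions

    def next_fetch(self, pc: int) -> int:
        """Predict the address to fetch after the branch at `pc`."""
        if self.pht[self._index(pc)] >= 2 and pc in self.btb:
            return self.btb[pc]  # predicted taken and target known
        return pc + 4            # otherwise fall through

    def update(self, pc: int, taken: bool, target: int) -> None:
        """Train both tables once the branch outcome is known."""
        i = self._index(pc)
        self.pht[i] = min(self.pht[i] + 1, 3) if taken else max(self.pht[i] - 1, 0)
        if taken:
            self.btb[pc] = target

bp = BranchPredictor()
print(hex(bp.next_fetch(0x40)))  # 0x44: nothing learned yet
bp.update(0x40, taken=True, target=0x10)
print(hex(bp.next_fetch(0x40)))  # 0x10: counter now says taken and the BTB supplies the target
```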

Section 8: Beyond the Basics

SIMD and VLIW Architectures

Embark on a journey into parallelism with SIMD (Single Instruction, Multiple Data) and VLIW (Very Long Instruction Word) architectures. Uncover how these architectures accelerate tasks like media processing.

Utilizing Parallelism for Media Processing

Unlock the power of parallelism in media processing tasks. Discover how processors leverage SIMD extensions and, mostly in digital signal processors, VLIW designs to process vast amounts of data in parallel, transforming media experiences.
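Python has no SIMD instructions, but the core idea, one operation applied to several data lanes at once, can be sketched with SWAR (SIMD within a register): four 8-bit values packed into one 32-bit integer are added lane by lane with a single addition plus masking, with no carry leaking between lanes. Real SIMD units such as x86 SSE/AVX or Arm NEON do this in hardware on much wider registers; the helper names here are illustrative.

```python
LOW7 = 0x7F7F7F7F  # low 7 bits of each 8-bit lane
HIGH = 0x80808080  # top bit of each lane

def pack(lanes):
    """Pack four bytes into one 32-bit word, lane 0 in the lowest byte."""
    return sum((v & 0xFF) << (8 * i) for i, v in enumerate(lanes))

def unpack(word):
    return [(word >> (8 * i)) & 0xFF for i in range(4)]

def swar_add(a: int, b: int) -> int:
    """Add four packed 8-bit lanes at once, wrapping each lane modulo 256."""
    low = (a & LOW7) + (b & LOW7)  # top bits masked off, so carries cannot cross lanes
    return (low ^ ((a ^ b) & HIGH)) & 0xFFFFFFFF  # fold each lane's top bits back in

pixels = pack([10, 200, 30, 255])
brighten = pack([5, 100, 5, 1])
print(unpack(swar_add(pixels, brighten)))  # [15, 44, 35, 0]
```

Media code often prefers saturating adds (255 + 1 stays 255), which SIMD instruction sets also provide; this sketch wraps for simplicity.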

Challenges and Benefits of VLIW

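Delve into the challenges and benefits of Very Long Instruction Word (VLIW) architectures. Understand how VLIW moves the job of finding parallelism from hardware to the compiler, which must schedule instructions carefully, and why unfilled instruction slots and compatibility across processor generations become challenges.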
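The sketch below plays the role of a simple VLIW compiler: it greedily packs independent operations into fixed-width bundles and pads unused slots with NOPs. This shows both the benefit (parallel issue without run-time scheduling hardware) and a cost (wasted slots and larger code). It assumes every operation completes within one bundle and considers only true (read-after-write) dependencies; the `(dest, srcs)` operation format is hypothetical.

```python
from typing import List, Optional, Tuple

def bundle(program: List[Tuple[str, Tuple[str, ...]]], width: int) -> List[List[Optional[int]]]:
    """Greedy list scheduling of (dest, srcs) operations into bundles of `width` slots."""
    last, deps = {}, []
    for i, (dest, srcs) in enumerate(program):
        deps.append([last[s] for s in srcs if s in last])
        last[dest] = i

    placed = {}  # op index -> bundle number
    remaining = list(range(len(program)))
    bundles = []
    while remaining:
        b = len(bundles)
        slots = []
        for i in remaining:
            # An op may go here only if everything it reads was produced in an earlier bundle.
            if len(slots) < width and all(placed.get(j, b) < b for j in deps[i]):
                slots.append(i)
        for i in slots:
            placed[i] = b
            remaining.remove(i)
        bundles.append(slots + [None] * (width - len(slots)))  # None marks a NOP slot
    return bundles

program = [("a", ("x", "y")), ("b", ("a",)), ("c", ("z",)), ("d", ("b", "c"))]
for slots in bundle(program, width=2):
    print(["nop" if i is None else program[i][0] for i in slots])
# ['a', 'c']
# ['b', 'nop']
# ['d', 'nop']
```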

Neuromorphic and Quantum Architectures

Peer into the future of CPU architecture with neuromorphic and quantum designs. Explore unconventional paradigms that promise to revolutionize computing as we know it.

Exploring Unconventional Computing Paradigms

Venture into the realm of neuromorphic and quantum architectures. Embrace computing paradigms inspired by the human brain and the astonishing world of quantum mechanics.

Section 9: Future Horizons

The Road Ahead: Trends and Innovations

Glimpse into the crystal ball to anticipate the future of CPU architecture. Explore emerging trends and innovations that promise to shape the computing landscape.

AI-Optimized Architectures

Uncover how AI is driving architectural innovation. Witness the rise of specialized architectures optimized for artificial intelligence and machine learning workloads.

Customization and Domain-Specific CPUs

Peek into the future where customization rules. Explore domain-specific CPUs tailored for specific tasks, ushering in a new era of efficiency and performance.

Ethics and Sustainability in CPU Design

Navigate the ethical considerations of CPU design. Discover the balance between performance and energy efficiency, as well as the growing concerns of e-waste and recycling.

Balancing Performance with Energy Efficiency

Contemplate the delicate balance between performance and energy consumption. Understand how CPUs of the future must optimize both to ensure sustainable computing.

E-Waste and Recycling Challenges

Face the environmental challenges posed by e-waste. Learn how responsible design and recycling practices are becoming integral to shaping the future of CPU architecture.

Conclusion

Reflecting on the CPU Architectural Odyssey

Take a moment to reflect on the remarkable journey through CPU architecture. From transistors to execution pipelines, witness how this field has transformed the world.

Embracing the Beauty of Computing’s Core

As you conclude this journey, embrace the intricate beauty of CPU architecture. Recognize the marvels that enable the digital world to flourish and the limitless potential that lies ahead.

Frequently Asked Questions (FAQ) – Understanding CPU Architecture: From Transistors to Execution Pipeline Mastery

- What is CPU architecture? CPU architecture refers to the design and organization of a central processing unit (CPU), which is the brain of a computer. It involves understanding how various components work together to execute instructions and process data.

- Why is CPU architecture important? CPU architecture determines the performance, speed, and capabilities of a computer. It influences how efficiently a CPU can process instructions and data, directly impacting the overall computing experience.

- What are transistors, and how do they relate to CPUs? Transistors are tiny semiconductor devices that act as switches, controlling the flow of electrical signals. In CPUs, transistors are the building blocks that enable digital computations and logic operations.

- How do transistors enable digital computing? Each transistor acts as a switch that is either on or off, and these two states represent the binary digits 1 and 0 used to encode data and instructions. By arranging transistors in specific configurations, CPUs can perform complex calculations and logical operations on these binary signals.

- What are logic gates? Logic gates are fundamental building blocks that perform logical operations, such as AND, OR, and NOT. These gates are combined to create logical circuits that execute various tasks within a CPU.

- What is the control unit in a CPU? The control unit is responsible for coordinating and directing the operations of a CPU. It interprets instructions, manages data flow, and ensures that different components work together seamlessly.

- What is the role of the Arithmetic Logic Unit (ALU)? The ALU is responsible for performing arithmetic calculations (like addition and subtraction) and logical operations (like comparisons) required for data manipulation and decision-making.

- What are registers in a CPU? Registers are small, fast storage units within a CPU used to hold data temporarily during processing. They play a crucial role in executing instructions and managing data flow.

- What is the Von Neumann architecture? The Von Neumann architecture is a foundational concept in computer design where data and instructions are stored in the same memory, allowing for seamless manipulation and execution.

- What is pipelining in CPU architecture? Pipelining is a technique where the instruction execution process is divided into stages, allowing multiple instructions to be processed simultaneously in different stages of the pipeline.

- What are hazards in pipeline execution? Hazards in pipeline execution refer to situations where dependencies between instructions can cause delays or errors in the pipeline flow. These include data hazards and control hazards.

- How does branch prediction work? Branch prediction is a technique used to guess the outcome of a conditional branch instruction before it is actually known. It helps keep the pipeline filled with instructions, reducing stalls.

- What is out-of-order execution? Out-of-order execution is a strategy where a CPU may rearrange the order of instruction execution to maximize pipeline utilization and overall performance.

- What is speculative execution? Speculative execution is a technique where the CPU executes instructions ahead of time based on predictions, such as the likely direction of a branch, and discards the results if a prediction was wrong. It improves performance but can also introduce security vulnerabilities.

- What are SIMD and VLIW architectures? SIMD (Single Instruction, Multiple Data) and VLIW (Very Long Instruction Word) architectures are designs that enable processors to perform multiple operations in parallel, enhancing performance for specific tasks like media processing.

- What is multithreading in CPU architecture? Multithreading is the concept of executing multiple threads or tasks concurrently within a single CPU core. It maximizes resource utilization and enhances overall system responsiveness.

- What are AI-optimized architectures? AI-optimized architectures are specialized designs tailored to efficiently handle artificial intelligence and machine learning workloads. They leverage hardware acceleration to achieve faster AI computations.

- How does CPU architecture contribute to sustainability? Modern CPU designs focus on balancing performance with energy efficiency to reduce power consumption. Additionally, ethical considerations and recycling practices play a role in sustainable CPU design.

- What’s the future of CPU architecture? The future of CPU architecture involves trends like domain-specific CPUs, customization, and continued innovation for AI and quantum computing. The field is poised to reshape how we experience computing.

- What’s the takeaway from understanding CPU architecture? Understanding CPU architecture demystifies the technology powering our digital lives. It highlights the intricate dance of transistors, pipelines, and parallelism that enables the devices and experiences we rely on daily.

As we navigate the realm of CPU architecture, from the ingenious transistors that initiate the magic to the intricate execution pipelines that orchestrate the show, it becomes clear that each component plays a pivotal role in the grand symphony of computing. Delving into the control unit, ALU, and registers within the CPU itself unlocks a deeper appreciation for the coordinated dance that transforms instructions into actions.

For those hungry to delve even deeper into the captivating world of CPU architecture, high-quality resources await beyond these virtual pages. Dive into an insightful article on CPU Pipelining: How It Works and Why It’s Important from Tech Target, where they explore the essence of pipeline stages and how they revolutionize instruction execution. To gain a comprehensive view of the future of CPU architecture, check out Emerging Trends in CPU Design at Jaro Education, where they discuss trends like neuromorphic architectures and ethical considerations in CPU design.
